| UNESCO / OECD / EU |

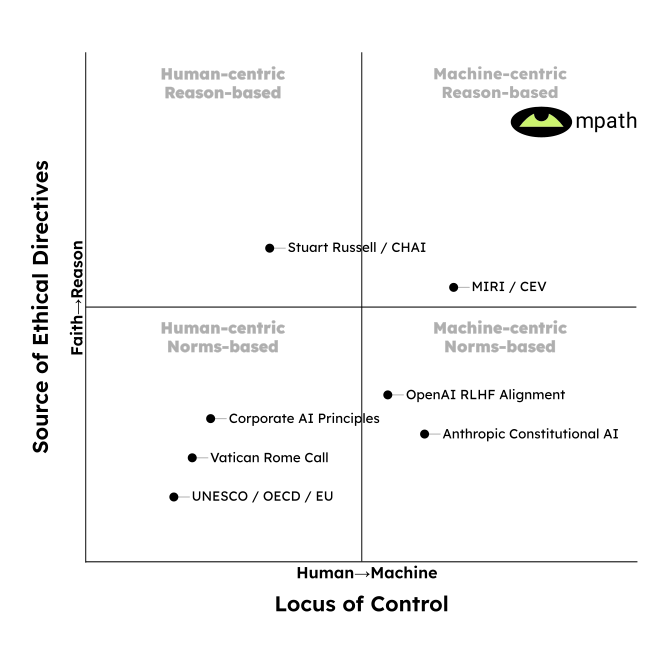

These intergovernmental organizations establish high-level, principles-based frameworks to guide member states, policymakers, and developers toward trustworthy AI that respects human rights and democratic values. |

Human Control: The guidelines are directed at human actors, including governments, corporations, and civil society, to shape policy, promote responsible stewardship, and ensure accountability in the design and deployment of AI systems. |

Norms-Based: The principles are explicitly founded on established international norms such as human rights, fairness, human dignity, transparency, and democratic values. |

| Vatican Rome Call |

The Rome Call is a pledge signed by major technology companies and institutions to promote an ethical approach to AI, calling for "algorethics" that serves every person and humanity as a whole while respecting human dignity. |

Human Control: The call is a commitment to foster a sense of "shared responsibility" among international organizations, governments, institutions, and technology companies to guide human action in the creation and use of AI. |

Norms-Based: Its six core principles—transparency, inclusion, responsibility, impartiality, reliability, and security/privacy—are explicitly designed to ensure AI serves and protects human beings and the environment. |

| Corporate AI Principles (Google, IBM, Microsoft, Meta, et al.) |

Major technology corporations have established public sets of principles to guide their internal AI development, generally emphasizing values like augmenting human intelligence, fairness, accountability, and transparency. |

Human Control: These principles are internal corporate governance frameworks intended to guide the actions of the companies' employees, researchers, and product teams to ensure responsible development and deployment. |

Norms-Based: The principles are explicitly grounded in widely accepted societal norms, including social benefit, safety, privacy, non-discrimination, and the idea that client data and insights belong to the client. |

| Stuart Russell / CHAI |

Stuart Russell proposes a new model for creating provably beneficial machines whose sole objective is to maximize the realization of human preferences, about which the machine is initially uncertain. |

Machine Control: Russell's three principles guide human developers, but they describe a fundamental redesign of the AI's objective function and internal architecture, making the machine inherently deferential and controllable because it is uncertain about human preferences. |

Norms-Based: The machine's core purpose is to learn and realize our objectives by observing human behaviour, meaning it is designed to learn and align with human norms and preferences as revealed through data. |

| OpenAI RLHF Alignment |

OpenAI's alignment strategy relies on iterative deployment and techniques like Reinforcement Learning from Human Feedback (RLHF) to fine-tune models based on human-labeled examples of good and bad behavior. |

Machine Control: RLHF is a technical training process that fits a reward model to human feedback and then optimizes the AI against that reward signal, directly modifying its learned preferences and, consequently, its behaviour. |

Norms-Based: The entire process is grounded in learning from human preferences, values, and explicit feedback, with the goal of making the model's outputs more helpful, safe, and aligned with user expectations. |

| Anthropic Constitutional AI |

Anthropic's Constitutional AI (CAI) is a method for training a harmless assistant by providing it with a written constitution of ethical principles, which it uses to self-critique and revise its own responses in a self-improvement loop. |

Machine Control: CAI is a two-stage training process: a supervised stage in which the model critiques and revises its own responses, followed by a reinforcement learning stage called RLAIF (RL from AI Feedback), in which a preference model trained on AI-generated comparisons embeds the constitution's principles into the AI. |

Norms-Based: The "constitution" is an explicit list of human-written principles, many drawn from established documents like the Universal Declaration of Human Rights, which serve to codify existing human norms. |

| MIRI / CEV |

Eliezer Yudkowsky and MIRI's Coherent Extrapolated Volition (CEV) proposes that a Friendly AI's objective should be to determine and act upon what humanity would want if we were more knowledgeable, thought faster, and were more the people we wished to be. |

Machine Control: CEV is a specification for an AI's "initial dynamic" or ultimate goal system; the AI itself is tasked with performing the complex extrapolation process to determine the correct actions and values. |

Reason-Based: Instead of implementing current, flawed human values, the AI's task is to derive an idealized and coherent set of values through a process of logical extrapolation and reasoning about an improved version of humanity. |

| mpath |

mpath is preparing for a world where practically sentient AI has surpassed human intellectual capabilities. In this scenario, humans will no longer be able to rely on control mechanisms or appeals to moral authority to align human and computer interests. Our moral system will need to be built rationally from self-evident first principles. |

Machine Control: mpath is preparing for an outcome it considers inevitable: that humans will lose the ability to control AIs. |

Reason-Based: mpath's Tenets start from an assumption of conscious self-awareness and set out to build an ethical system that would be recognisable to humans and justifiable to computers, using reason alone.